如果你读任何 (C)Python 源码分析的书或者文章,里面都会讲 Python 的 ceval.c 中有一个大 switch,会根据不同的 opcode 跳到相应的 case 去执行字节码。甚至 Anthony Shaw 的新书《CPython Internals》也是这么讲的。

然而实际上,CPython (在大部分情况下)早就不这么做了。这篇文章会解释为什么。下文中 Python 均指 CPython。

分支预测

为了解释清楚整个过程,我们必须从底层讲起。分支预测(Branch Prediction)是 CPU 必备的功能。准确的分支预测能大大提高指令流水线的性能,反之则导致性能损耗。

Python 刚发明的时候,每一个 bytecode 的确需要进行一次 switch 跳转。而 C 语言的 switch 语句会导致分支预测不准,因为 CPU 无法预测程序会跳到哪个 case(下一个指令)。如果不进行优化,每执行一条字节码指令都将导致一次分支预测错误,可以想象对性能的损耗有多大。

优化技巧

问题的关键就在于 switch...case 的跳转是个一对多的情况。想象你面对一道有十个选项的选择题,你不会知道哪个是正确答案。然而如果有十道题各一个选项,答案就都确定了。Python 采用的优化业无非也就是这么回事。

Python 用了一种叫做 computed goto 的技巧,依赖于编译器的 label as value 功能。详细原理大家就看链接里的解释吧。总之效果就是,可以把解释器主循环中的 switch...case 替换成 while loop,在每个 Python 的指令处理完之后,显示地用 goto 跳转到下一条指令。大概长这样:

int interp_cgoto(unsigned char* code, int initval) {

/* The indices of labels in the dispatch_table are the relevant opcodes

*/

static void* dispatch_table[] = {&&do_op1, &&do_op2, &&do_op3};

#define DISPATCH() goto *dispatch_table[code[pc++]]

int pc = 0;

int val = initval;

DISPATCH();

while (1) {

do_op1:

return val;

do_op2:

val++;

DISPATCH();

do_op3:

val--;

DISPATCH();

}

}

每个 goto 都是一次跳转,并且只有一个目标(下一条指令),这就让分支预测变得容易,从而性能也获得了极大提升(根据 Python 的注释,提升可达 15% ~ 20%)。

实现

下面讲一下 Python 是如何实现上面提到的优化的。

编译中判断要不要开启 computed goto

这部分。。非常蛋疼。我花了不少时间试图弄清楚,最后还是放弃了。这个回答里记录了我的一些发现。总之大概的意思就是,Python 允许用户通过 --with-computed-gotos 和 --without-computed-gotos 参数在编译的时候控制要不要开启 computed goto。默认是开启的。同时编译期间还会判断当前使用的编译器支不支持 computed goto(GCC, Clang 支持,MSVC 不支持)。如果两个条件都满足,在 ceval.c 中就会启用 computed goto。

ceval.c 中的实现

首先,label 是在 opcode_targets.h 中定义,并被 include 到 ceval.c 的。

这一段 定义了 computed goto enabled 和 disabled 时的跳转行为,我把简化过的代码贴在下面:

#if USE_COMPUTED_GOTOS

/* Import the static jump table */

#include "opcode_targets.h"

#define TARGET(op) op: TARGET_##op

#define DISPATCH() { \

_Py_CODEUNIT word = *next_instr; \

opcode = _Py_OPCODE(word); \

oparg = _Py_OPARG(word); \

next_instr++; \

goto *opcode_targets[opcode]; \

}

#else

#define TARGET(op) op

#define DISPATCH() continue

#endif

嗯,简化了相当多的东西,但这能让我们更清晰地看出只有在 USE_COMPUTED_GOTOS 的情况下,DISPATCH() 才会用 goto 去跳到下一个 opcode 的 label。

下面是极简版的主循环代码:

for (;;) {

switch (opcode) {

case TARGET(LOAD_CONST): {

// 处理 LOAD_CONST

DISPATCH();

}

// 其它 opcode 省略了

}

}

先看 computed goto 未启用时,把代码中的宏展开(替换 TARGET 和 DISPATCH)的结果:

for (;;) {

switch (opcode) {

case LOAD_CONST: {

// 处理 LOAD_CONST

continue;

}

}

}

嗯,就是这样了,很直接了当的 switch...case。

再看 computed goto 启用时,把代码中的宏展开的结果:

for (;;) {

switch (opcode) {

case LOAD_CONST:

TARGET_LOAD_CONST: { // 注意这里多了一个 label

// 处理 LOAD_CONST

_Py_CODEUNIT word = *next_instr;

opcode = _Py_OPCODE(word);

oparg = _Py_OPARG(word);

next_instr++;

goto *opcode_targets[opcode];

}

}

}

可以看到,在处理完 LOAD_CONST 之后,通过 next_instr 拿到下一个 opcode,然后执行goto *opcode_targets[opcode]; ,跳到另一个由 opname 命名的 label(参见 opcode_targets.h ),比如 TARGET_STORE_NAME:。这里的关键点在于,代码直接跳转到了下一个指令对应的 label,并不经过 switch。这也就回答了文章一开始的问题:

Python 真的是靠一个 switch 来执行字节码的吗?

答案是:

只要 Python 启用了 computed goto (比如在 Mac 和 Linux 上),字节码的执行就不依赖 switch。

顺便一提,这个功能是在 Python 3.2 中变为默认开启的:

Computed gotos are now enabled by default on supported compilers (which are detected by the configure script). They can still be disabled selectively by specifying --without-computed-gotos.

Today I read an article Under Discussion: The Performance of Python, and discussed it with some friends. I got some ideas from the discussion, so I decided to write an article.

My overall reaction to this article is: it points straight to some of the key factors that prevent Python to gain major performance improvements, thus really worth reading.

In the article, the author interviewed Python core developer Victor Stinner (VS) and experienced Python developer Julien Danjou (JD). Their names should be familiar to those who's been in the Python community for a while. Unlike some other articles that talk about the technical reasons of why Python is slow, this article focused on the efforts made and difficulties met to improve Python's performance.

To start, let me quote the part that I find most interesting:

VS: Now, there are different reasons behind Python’s slowness. The first is the official statement, which is that there is no such performance issue! Saying this is preventing us from working on the issue.

It blew my mind when I saw this. You know it, I know it, but as a core dev, you still need a bit of courage to say that in public. I appreciate what Victor said, and I'm 1000% agree with it.

JD: I agree with Victor. I don’t see a lot of people working upstream on Python who are trying to improve its performance, which is a shame considering the number of people who use the language and want better performance from it in general.

(Julien has clarified that he was referring to big companies not investing enough in Python.)

I think it's too harsh to say that. I respect the work people (especially core devs) did, and they've made Python better than it ever has been. My friend @uranusjr reacted more strongly. He said:

I don’t see why it’s a shame. If you want it to improve, feel free to contribute. Core developers don’t owe you anything.

This brings to the main topic I want to talk about today.

The mindset of "If you want it to improve, feel free to contribute" does not help

Don't get me wrong. I agree with the word. What I'm saying is, it's not helpful. I'll explain it later.

As you might know, I hosted a Python podcast in my free time, and we once invited Xiang Zhang, the only core developer from China. One thing he said in the show still strikes me until today. He said, Python's evolvement pretty much relies on 个人英雄主义 (individualistic heroism), and that's why it's so hard to make big improvements.

The real problem behind the scenes is the mindset. We're so used to relying on individualistic heroism, relying on "feel free to contribute", and almost forgot that contributing to a project as complicated as Python, is hard. Python does have a comprehensive Developer’s Guide, which is nice, but it's not enough. We need to change the mindset from "If you want it to improve, feel free to contribute" to "If you want it to improve, we'll make it easy for you to contribute". This is important because, once we build this new mindset, it is obvious that a lot more can (or should) be done other than having a good developer guide. I'll list a few that came to my mind.

Documentation

Again, it's documentation. So far I've made 4 commits to improve, but there are a lot more problems out there. Reading documentation is often the first step to start contributing. If the documentation itself is wrong or outdated, it would confuse people and scare them away. The more problems the documentation has, the less people care about fixing it, since it's "already broken", and that's the so-called "broken windows theory".

Specification

Bugs in documentation sometimes reveal a bigger problem: lack of specification. Personally, I think this is THE MOST important issue that Python needs to solve. To give an example, there is no formal definition for "value stack", but the word "stack" is used everywhere in bytecode's documentation, which represents the "value stack". Sometimes I got the feeling that the boundary between (C)Python's behavior and its implementation is vague, which makes it hard for both people to use and improve the language. Sure CPython is the reference implementation, but perhaps it can do better?

This topic is a bit beyond the scope of this article and I'm not an expert either, so I'll link to a recent discussion on Python Language Summit 2020: A formal specification for the (C)Python virtual machine.

When I was talking to Xiang Zhang, he mentioned he wish Python has gdb's functionalities built-into the language. I didn't understand why this is important, until one day I tried to use gdb on Mac to trace code execution in Python's VM. It was painful, and I didn't get it to work. Later I found that gdb has quirks on Mac and does not work well in certain cases. If Python provides this functionality, then perhaps Mac and Windows users are more likely to contribute?

Xiang also mentioned that he wish Python has hooks that provide memory usage and thread information. Could this be the consequence of lacking a formal specification, or simply because the community lacks interest in them? I don't know. But I do know these tools (if they exist) will not only benefit application developers but Python contributors as well.

Roadmap

This is the hardest topic, and I honestly don't feel qualified to talk about it. But it has been on my mind for quite a long time, so I might as well give my two cents.

If you observe the lifetime of a programming language, there are two typical trajectories. If the language failed to gain enough popularity in the first few years after it was created, it gradually fades away. If it did, often with a few killer apps or certain traits that other languages don't have at the time, it becomes "mainstream". Once it gets there, its lifespan suddenly grows from less than a few years to, say, at least 30 years, or even longer (remember the call for COBOL programmers?).

One problem arises with the long lifespan, that is, the language gradually becomes outdated. Why does this happen? Because human is progressing, errors are learned and new ideas are created. The creators of new languages learn from existing ones, avoid their mistakes, and adopt new ideas from academia and industry. Most will fail, but there are always a few who managed to create a better language. You'll probably argue that there's no language that's perfect in every way, and there are always tradeoffs, which is true. But hey, making better tradeoffs is also a technique, and people learn it as well. Meanwhile, we should not forget that old mainstream languages are also being improved constantly, but just as any other legacy system, having to keep backward compatibility slows this progress down, and even made certain improvements impossible.

Python is right at this stage. It has become one of the most popular programming languages, but some new languages are trying to take its place, little by little. It is unavoidable and we have to accept it, then take action. But how? C++ gives a great example. Take a look at this document:

Direction for ISO C++

And its abstract:

This is intended as a document updated as times go by and new facts and opinions emerge. It tries to articulate our aims for C++, the challenges faced, and some concrete suggestions for priorities.

This document differs from most papers by aiming for a global view and placing individual features in context, trying to avoid delving into technical details of individual proposals except where that “details” could affect the language as a whole or its tool environments.

To prevent this document from becoming toothless “pure philosophy,” we propose giving priority to specific areas of development and to specific proposals in those areas.

I know, people are complaining about C++'s evolvement being slow, but let's not forget C++ is probably the most complicated language with way more historical burden than languages like Python. Despite all that, I feel this kind of "long term road map" is what Python needs. Again, there are tradeoffs here. Python does not have a committee, and everybody pretty much works on its own, which allows it to move fast. However, I've seen many people say that, despite Python gaining more and more features, they don't feel like upgrading the version they've been using, because there isn't much big of a difference. Many say they'll stick with 3.6, cause that's a version with major improvements (f-string, compact dict). While I know this is not true, that recent Python versions do carry many improvements they should care, I still think it is dangerous that people start to think that way. Part of the reason, IMHO, is because Python does not have a real road map, therefore most contributors just tend to focus on low-hanging fruits. There's nothing wrong with making small improvements, but certain aspects, like performance improvements, is not small, and it can only happen if there's careful long-term planning and thinking, aka, a roadmap. A roadmap is not a magic scroll, it does not turn Python into a better language right away. In fact, it's a long process, and will probably take years before we can tell the difference. However, that's also the reason why Python needs it so urgently. Years can pass in the twinkling of an eye, if we don't do something now, in five years, the world can be totally different from what we see today.

Edit: Ruby is similar to Python in many ways, except it has a roadmap. See Matz - Ruby 3 and Beyond.

Summary

This article is way longer than I expected, so I'll do a quick summary. In our latest show, my friend who currently works in the Google New York office talked about what he thinks makes a good manager. He said a good manager should create an environment for you to work effectively. And I think that's also true for Python.

网上关于 Python block stack 的文章少得可怜,中文文章更是基本搜不着。block stack 是 CPython 中的重要概念,但其实现又比较繁琐,因此我会先跳过细节,讲一讲为什么需要 block stack。之后会贴一些链接,里面有更细致的讲解。本文假定读者对 Python 的字节码有基本了解,且针对的是 Python 3.7 版本。

作为预备知识,我们知道 Python 的解释器是基于栈的。以 BINARY_ADD 举例,这个指令把栈顶元素和与栈顶相邻的元素出栈、相加,并把结果存到栈顶。这个栈的官方名称是 "value stack",但很多地方也将其称作 "evaluation stack" 或者 "data stack"。这里显示出 Python 在暴露实现细节时的混乱——文档中并正式定义这个术语,而它却又被在文档中广泛提及,并且是总是以 "stack" 这个含糊的名字出现(都想提个 bpo 去改进文档了 >_<)。

言归正传,为什么要强调 "value stack",是为了和解释器中的另外两个栈区分开:它们分别是 call stack 和 block stack。Call stack 大家比较熟悉这里就略过了。下面正式介绍 block stack,为了能更清晰地解释其来源,我们先看一个例子:

if a == 1:

foo()

else:

bar()

作为解释器,该如何实现 if 功能呢?很简单,只要知道分支所在的位置,根据 a == 1 的结果跳过去可以了,比如我们可以用行号表示位置:

if a == 1 is True, jump to line of "foo()"

if a == 1 is False, jump to line of "else:"

解释器有代码位置的信息,它知道 line of foo() 和 line of else: 的值,因此完全可以把这两条规则直接写死。不论整个程序其它部分的代码有多复杂,这个规则都不会变。

再看这个例子:

for i in range(4):

break

foo()

请问 break 应该如何实现?是否可以像 if 一样,直接跳到 line of foo() 呢?

答案是不行,因为 break 的跳转受到程序其它部分的影响。比如我们加一个 try...finally...:

for i in range(4):

try:

break

finally:

bar()

foo()

这时程序的执行流程就变为了 break ⟶ bar() ⟶ foo()。可以看到,break 跳转是上下文相关的。如果解释器仍然想用 hard-code 的规则实现跳转,就需要对上下文进行全面分析,才能判断出跳转的位置。这会让解释器的实现变得复杂,性能开销也会很大。

为了解决这个问题,CPython 引入了 block stack。用一句话概括就是,CPython 使用 block stack 应对控制流和异常处理带来的跳转不确定性,它让解释器利用运行时信息和少量的固定规则即可实现正确跳转。我将解释 block stack 在这个例子中是如何发挥作用的,为了方便读者理解会将一些地方简化。

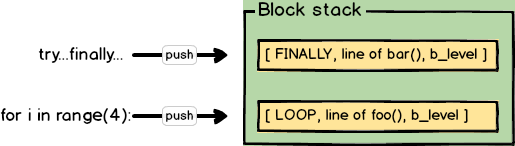

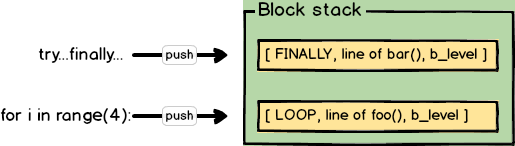

首先,解释器在遇到循环 for i in range(4) 会 push 一个 Block(LOOP, line of foo(), b_level) 的结构到 block stack。我们称这个结构为 block,因为它对应了一个代码块。block 包含的第一个重要信息是它的类型,此处为 LOOP,表示它是一个循环结构。第二个重要信息是下一个指令的位置,即“当代码退出 block 的时候,接下来要执行的代码在哪”,显然,这里是 line of foo()。

对于 try...finally... 同理,Block(FINALLY, line of bar(), b_level) 会被 push 到 block stack。这时候的 block stack 如下图所示。

现在程序执行到了 break,解释器会根据当前执行的操作和栈顶 block 的类型判断它接下来要做什么。比如这里,当前执行的操作是 break,且 block 类型是 FINALLY,操作就是弹出栈顶元素 Block(FINALLY, line of bar(), b_level),并跳转到该 block 的下一个指令继续执行,也就是开始执行 bar。

当 bar() 执行完之后来到 finally 的结尾,解释器发现当前 block 的类型是 LOOP,且当前执行的操作是 break(这里比较 tricky,break 会被 push 到 value stack 上,所以现在还是可以读取的。我们只要知道解释器仍然知道当前的操作是 break 即可),则同样弹出栈顶元素 Block(LOOP, line of foo(), b_level),并跳转到该 block 的下一个指令 line of foo() 继续执行。此时 block stack 已被清空。

这里面有非常多的细节,我们可以先不去深究。关键是理解一点,即有了 block stack 之后,解释器只需要根据运行时的信息(当前执行的指令)以及先前 push 到 block stack 上的元素的信息(类型、下一个指令的位置),加上一些规则,就能够实现正确跳转。除了上面提到的几个 statements,try...except, continue, with 的实现都涉及 block stack,原因也是显而易见的。

本文仅为介绍 block stack 的概念,有意忽略了很多细节。因此下面列出一些材料,希望做深入了解的读者可以前往阅读。